Episodes

Saturday Jul 12, 2025

The Nuremberg moment happened in 1945: a group of countries came together to create the first-ever international criminal tribunal with jurisdiction over crimes against humanity and war crimes.

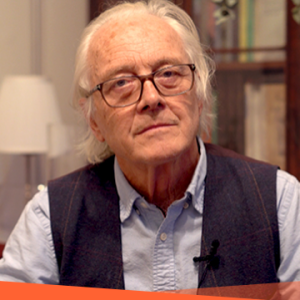

About Philippe Sands

"I’m Professor of Law at University College London, a barrister at Matrix Chambers and a writer."

Key Points

• The Nuremberg moment happened in 1945: a group of countries came together to create the first-ever international criminal tribunal with jurisdiction over crimes against humanity and war crimes.
• What followed Nuremberg was the creation of new international treaties preventing genocide, and the coining of the idea of human rights – that each of us has individual rights and collective rights.
• We are in the very early stages of a change that will take many decades, if not centuries, to come to full fruition.

The birth of international law

The Nuremberg moment happened in 1945: a group of countries came together to create the first-ever international criminal tribunal with jurisdiction over crimes against humanity and war crimes. It was the famous Nuremberg tribunal, and it sat for about a year in the German city of Nuremberg, trying a number of senior Nazis for crimes committed during their rule.

What the Nuremberg moment did was determine, for the first time in human history, that the power a state, a sovereign, a king, an emperor, a president or a Führer exercised over its people was not unlimited – that individuals and groups had rights. These rights were encapsulated in two new inventions from the summer of 1945. One was the concept of crimes against humanity – the protection of individuals – which was coined by Hersch Lauterpacht, a professor of international law at Cambridge University. The other was the crime of genocide, invented by the Polish lawyer Raphael Lemkin. This was not about individuals but about the protection of groups. In this Nuremberg moment, in 1945, the idea that we all have rights as individuals and as members of a group, or groups, came of age, and it has prospered ever since.

Friday Jul 11, 2025

Helena Kennedy, barrister at the English bar, member of the House of Lords and head of the IBAHRI, talks us through gender bias in law.

About Helena Kennedy

"I’m a barrister, a member of the House of Lords and the head of the International Bar Association’s Human Rights Institute. I am a founding member of the Bonavero Institute of Human Rights at the University of Oxford."

Key Points

• Women everywhere make up a very small percentage of those who come before the court. Women on the whole are less inclined to criminal activity.
• In every country, impunity for violence against women is still a very serious problem.
• It’s only comparatively recently that we’ve started to challenge the assumption that women will lie about matters to do with sex.
• The way to address inequality is to bring it into curriculums, particularly in our law schools, and to make sure that we have a much more diverse judiciary in common law systems.

The law everywhere operates on a very real fiction: that it is neutral and will apply fairly to everybody. Of course, we know that isn’t true. It certainly isn’t true in class terms, but it’s also not true in relation to women. The law is male. By its nature, law has been created by the powerful in society and, until comparatively recently, that did not include women. Law in our nations has often come out of religion, from the elders, priests, bishops and those who created the laws of our church – and the basis on which societies work was created by men.

Law has been a product of a male experience of the world, and as such it often fails women. I saw that when I started practising as a criminal lawyer and began shining a spotlight on how the law was actually affecting the women I represented. Women everywhere make up a very small percentage of those who come before the court. Women on the whole are less inclined to criminal activity.

Thursday Jul 10, 2025

Samuel Moyn, Chancellor Kent Professor of Law and History at Yale University, examines the development of human rights.

About Samuel Moyn

"I am Chancellor Kent Professor of Law and History at Yale University."

Key Points

• The modern concept of the “Rights of Man” was born out of the French and American revolutions.
• The first declarations of rights founded new states; later, rights claims extended to women, to people of different ethnic backgrounds, and to work, health and a basic income.
• In the 1960s and 1970s, international movements that demanded human rights rekindled socialist ideals.

The history of human rights is a drama in two acts. The first act, after the idea of human rights emerged in books of moral philosophy, is about revolution and revolutionary rights, and it is associated in the first instance with the Atlantic revolutions: the American in 1776 and the French in 1789. There’s no doubt that rights were announced in both, and indeed they were announced as “natural” in the American case and “human” in the French. In fact, it was the French Revolution that led one of the instigators of the American Revolution to become one of the first to use the phrase “Rights of Man” in English, when he translated some of the French revolutionary slogans. If we think for a minute about the nature of those original revolutionary rights, we’ll see how different they were from later rights. First of all, they were deployed with a purpose: people cited their rights to justify the political renovation of their countries and the redefinition of their citizenship. In the American case, it was about secession from an empire and the creation of the first post-colonial nation. In the French case, it was about creating first a constitutional monarchy, and then a republic.

Wednesday Jul 09, 2025

Hugh Brody, Honorary Associate at the Scott Polar Research Institute at the University of Cambridge, explores the role of the anthropologist.

About Hugh Brody

Honorary Associate at the Scott Polar Research Institute at the University of Cambridge.

Key Points

• After the N|uu language was thought to be extinct, one of the last remaining people to speak it was eager to share her stories and send a message to Nelson Mandela.
• Acknowledging the suffering, brutality and trauma inflicted on indigenous and minority groups in the imperialist process is one significant goal of anthropology.
• Ultimately, industrial societies have a lot to learn from indigenous peoples, and their loss is humanity’s loss.

In the 1990s, I began work on a mapping project with the people known as the Khomani, part of the San, or Bushmen, of South Africa – people who lived on the borders of South Africa, Namibia and Botswana. It was the community in South Africa that I was working with, and we were attempting to map with them their relationship to their world. Along the way, we realised it would be tremendously helpful if we could find people who spoke the original language of that community, N|uu, which had been declared extinct 20 years earlier by very thorough academic scholars in South Africa.

To our amazement, we heard that there was one person alive who did speak this language, a woman called Elsie Vaalbooi. I went with our colleagues, our team, including Elsie’s son, to visit her. She lived under a lean-to at the side of a little shack in a dusty, dry zone of South Africa, very close to the Namibian border in the Northern Cape. And there she lay on a sort of pallet, very old, very frail.

She turned out to be about 100 years old at the time, and she could not see very well. But when she heard that we had come to talk to her about her language, she was very, very excited and immediately started speaking this language that we had thought extinct, and we quickly identified that it was indeed the N|uu that had been recorded in the 1930s. She gave us words and began to tell stories in the language. After a while she was getting tired, but she said she wanted to record a message to Nelson Mandela.

This was 1997, not long after the ANC and Mandela had come to power.

Tuesday Jul 08, 2025

A society of equals is one where no one looks up to anyone and no one looks down on anyone. No one is exploited, no one is dominated and no one is subjected to violence.

About Jonathan Wolff

"I’m the Alfred Landecker Professor of Values and Public Policy at the Blavatnik School of Government at the University of Oxford.

I’m a political philosopher, and my research is largely about making connections between political philosophy on the one hand, and central questions in public and social policy on the other hand."

Key Points

• To judge the justice of our society, we should look at the wealth and income of the worst-off and see whether their wealth can be increased.
• A society of equals is not so much about the distribution of resources as about the way in which we relate to and regard one another.
• The alternative social model of disability says that we should change the world so that more people can fit into it, rather than trying to change people to fit our world.

One of the main themes in my work over the years has been thinking about equality and inequality from the point of view of distributive justice. Coming from a well-meaning, middle-class, left-wing family, I always thought that great inequality was unjust, particularly inequality of wealth. When I got to university as an undergraduate, I was pleased that this was one of the topics I would be studying, just a few years after John Rawls had published his famous A Theory of Justice. He argued that a just society is one where the worst-off are made as well off as possible. To judge the justice of our society, we should look at the wealth and income of the worst-off. We should see whether the wealth of the worst-off can be increased without others becoming poorer than the worst-off people were before. In other words, we judge the justice of a society by how it treats those who are worst off.

This is a very appealing view, but it does allow inequalities. It says that if we need inequality to make the worst-off better off, we should accept it. Some people on the left argued that this is wrong and that we should aim for greater equality, even if it means that everyone suffers. This debate continues. Should we allow incentives for the rich on the grounds that they will bring the poor with them – a rising tide lifts all boats – or should we say no: what’s important is income equality, even if that makes everyone a little worse off than they might otherwise have been?

Tuesday Jul 08, 2025

Eva Hoffman, Visiting Professor at University College London, discusses what lessons, if any, we have learned from the Holocaust and other genocides.

About Eva Hoffman

"I’m a Visiting Professor at the European Institute of University College London. I write about the aftermaths of difficult history, democracy and human time.

I was born in Poland two months after World War II ended. My parents survived the Holocaust in hiding in Ukraine. I have written about living in a second language, about the long aftermath of traumatic histories, about the transition to democracy in Eastern Europe after 1989 and about the patterns of human time."

Key Points

• Allowing victims to confront perpetrators is a prime example of learning to deal better with the aftermath of genocide.
• Mutual recognition, dialogue and the creation of strong solidarity are vital to help prevent genocides.
• Our best hope of preventing genocides may lie in strengthening liberal democracies, which have been weakened in recent decades.

"Never again"

The question that arises from the study of the Nazi genocide and the genocides that have come after it is whether we have learned anything from them. The second generation’s temporal position allows it not only to delve into the historical context and causes of the Holocaust, but also to know what has happened in the 70 years since. And unfortunately, as we know, the motto “never again” has not been realised. We have seen a number of genocides and other forms of gratuitous violence, such as the awful phenomenon of apartheid in South Africa.

Friday Jun 27, 2025

Jim Secord, Director of the Darwin Correspondence Project, University of Cambridge, explains how perception of Darwin has evolved.

About Jim Secord

"I’m the Director of the Darwin Correspondence Project in the University Library at Cambridge University.

My research is on public debates about science in the 18th and 19th centuries. I’ve written particularly about Victorian evolution and debates about the problem of species and where we come from. I’ve also written about the reception of evolutionary works before Darwin published his Origin of Species."

Key Points

• Although Darwin was an eminent scientist in his day, his ideas fell out of favour after his death. Their significance, however, was rediscovered in the 1920s and 1930s.
• Darwin was not a Christian; however, he did not consider his theory of natural selection to be opposed to the concept of God.
• Darwin’s writings on religious issues were often thoughtful and nuanced. This ambiguity has led many to misinterpret him, especially in the common misconception that he was an atheist.
• Darwin avidly engaged in correspondence with scientists and thinkers from around the world. His numerous letters are particularly telling of his character.

Darwin was, of course, very famous among the Victorians. Yet in the decades after his death, and particularly in the early 20th century, his reputation and the number of references to him began to decline. There was a big celebration, in fact, here at Christ’s College for the centenary of his birth in 1909. At that time, people talked about standing at the deathbed of Darwinism, or about Darwin somehow being irrelevant.

It was only in the late 1920s and 1930s that Darwin’s reputation began to pick up. There are two reasons for this. One is that the Victorians started to be revived and seen as significant figures. The other reason is more involved. Darwin’s theory had undergone what many people called an eclipse in the life sciences, particularly in evolutionary biology. People thought that he had introduced a scientific way of thinking about evolution, yet they didn’t necessarily accept that natural selection was the right mechanism. Darwin came to be seen like Jean-Baptiste Lamarck and many other authors of his time: simply as someone who helped put evolution on the map.

However, in the 1920s and 1930s, new work combined Mendelian genetics with a statistical view of animal populations and with other work in natural history and classification. These ideas came together in a new view, which suggested that natural selection was, in fact, probably even more important than Darwin had expected.

Friday Jun 27, 2025

Peter Girguis, Professor of Organismic and Evolutionary Biology at Harvard University, examines microbes, life and its origins.

About Peter Girguis

"I’m a Professor of Organismic and Evolutionary Biology at Harvard University.

My research focuses on the deep sea and the relationships that animals and microbes have with one another, and also with their environment. We do a lot of work developing new tools to make measurements that we couldn’t make before. I do so with an eye towards democratising science, in the hope that everyone has an opportunity to study the deep ocean."

Key Points

• Microbes harness energy in ways animals can’t; life exists and Earth is habitable thanks to their ability to create oxygen, nitrogen and the conditions that animals need to survive.
• Scientists gain insights into life’s origins by observing life now and making deductions, but they have to be careful not to project today’s conditions onto the past.
• Microbiology may help us understand the relationship between the living and the non-living worlds.

When we think about life on Earth, we often immediately think of the organisms we’re familiar with, including ourselves. What does it mean to be alive on Earth? Well, it’s humans, cats, dogs or birds – things that we experience every day. Really, the diversity of life on Earth is in so many ways mind-blowing. There are animals that live very different lifestyles from ours. Some of the fish that live in the deep ocean, for example, never see the light of day. In fact, they never touch a surface. They float in mid-water their entire lives, and they have organs that allow them to make their own light. But microbes are even more extreme. Microbes are capable of doing things that we, as animals, could never possibly pull off.

Thursday Jun 26, 2025

Buzz Baum, Cell Biologist at the MRC Laboratory of Molecular Biology in Cambridge, explains the beginnings of life on Earth.

Key Points

• Darwin hypothesised that all living organisms are branches on a tree and that there is one single trunk to life on Earth.
• Two partners gave rise to all complex cells: bacteria and archaea. We are all composite organisms – a mixture of bacterial and archaeal genes.
• Many aspects of archaeal biology are very similar to our own. So, although we’re separated by billions of years, if you look closely, these tiny cells share a lot of biology with us.

The Tree of Life

Like many people, I’ve always been interested in nature, in the beautiful creatures around us when we go walking in a forest or see all these different creatures. For most of human history, people didn’t really ask whether there were common ancestors. The first person to wonder whether, despite all the diversity of life on Earth, there are any commonalities, some sort of common relationships, was Charles Darwin.

In his notebooks, he drew a picture of a tree, and he used the metaphor that all living organisms are branches on the tree, and that they all join up into one trunk. He imagined in his sketchbooks that there was this single trunk to life on Earth. And we now know that his intuition was correct. Once upon a time, there was one organism, and that organism gave rise to everything on Earth. In a way, you could look at all life on Earth as one colony. Just as each of us starts as a single cell and gives rise to a whole body, the whole of life on Earth is descended from a single cell, and that cell grew and divided, and grew and divided, and gave rise to all life on Earth.

Thursday Jun 26, 2025

Jim Secord, Director of the Darwin Correspondence Project, University of Cambridge, explains the traits Darwin thought to be fundamental to humans.

About Jim Secord

"I’m the Director of the Darwin Correspondence Project in the University Library at Cambridge University.

My research is on public debates about science in the 18th and 19th centuries. I’ve written particularly about Victorian evolution and debates about the problem of species and where we come from. I’ve also written about the reception of evolutionary works before Darwin published his Origin of Species."

What it means to be human is a crucial question for Darwin. You get an interesting impression of this from his writings. If you read On the Origin of Species, for example, there’s hardly anything in it about human beings. There is one sentence where he says that “light will be thrown on the origin of man and his history”. And there are a few other examples that involve people.

What needs to be emphasised is that the core idea of On the Origin of Species, evolution by natural selection, comes from thinking about humans and what it means to be human. It comes from Thomas Malthus’s work of political economy, An Essay on the Principle of Population. When Darwin was thinking about why some individuals survive in the struggle for existence and others don’t make it, he was thinking about people; he was thinking about you and me.

I think it’s really important to realise that throughout Darwin’s theoretical thought, this question about humanity, about what it means to have a mind and to be part of the natural world at the same time, is the core of what he’s trying to answer. We can see this in his later writings, when he publishes The Descent of Man, and Selection in Relation to Sex in 1871. This is a two-volume work which argues in the first part that humans come from lower animals. In making this argument, Darwin shows how a whole range of different characteristics – our morals, our belief in God, our love of music – are in various ways present within lower animals.